Amazon review scraping services extract datasets that help businesses monitor competition and market trends and keep their workflows running smoothly.

30 Jan 2024

In 2024, scraping data from Amazon can help businesses and people who want to do well in online selling. Users can easily collect essential details about Amazon products with special tools and APIs. This helps them know more about what's selling and make smarter choices to stay ahead of others who are selling similar things.

Amazon is one of the world's most popular online shopping platforms. Analysts and organizations rely on the data available on the platform to analyze e-commerce trends. They also use this data to understand customer behavior and gain a competitive advantage. One of the most common forms of data extracted from the Amazon website by the experts at Reviewgators is user reviews. This data is essential for sentiment analysis and competition monitoring.

Amazon review scraping means using automated tools to collect information from Amazon's website. This information can include product listings, pricing, reviews, and ratings. An Amazon reviews scraper is a web scraping tool that collects product reviews from Amazon using product URLs. The main goal is to obtain relevant, valuable data from Amazon that supports better decisions.

A scraper works against Amazon's public review pages: it requests a product page and retrieves the necessary data from the reviews section. The aim is to gather this information for several purposes, including market research, customer sentiment assessment, and product review analysis. Web scraping Amazon reviews also provides information that helps businesses make decisions.

Amazon data scraping requires some specific knowledge, but it can be done by following a defined process. The process uses two Python packages.

bs4: Beautiful Soup (bs4) is a Python package for extracting data from HTML and XML files. Python does not include this module by default. To install it, run the following command in the terminal.

pip install bs4

requests: Requests allows you to send HTTP/1.1 requests with ease. Python does not include this module by default. To install it, run the following command in the terminal.

pip install requests

To begin web scraping, we must first complete a few preliminary steps. Import all essential modules. Then create a header to send with every request to Amazon. Requests that lack browser-like headers (a User-Agent and, in some cases, session cookies) are likely to be rejected, and you will receive an error page instead of the product page.

Pass the URL into the getdata() function (a user-defined function), which makes a request to the URL and returns the response text. We use the get function to retrieve data from a server at a given URL.

Syntax: requests.get(url, args)

Pass the returned HTML to bs4, which parses it into a searchable tree.

Syntax: soup = BeautifulSoup(r.content, 'html.parser') Parameters: r.content : The raw HTML content. html.parser : The HTML parser we want to use.

Now, use soup to filter the needed data with the find_all() function.

# import modules
import requests
from bs4 import BeautifulSoup

# browser-like headers sent with every request
HEADERS = {
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                   'AppleWebKit/537.36 (KHTML, like Gecko) '
                   'Chrome/90.0.4430.212 Safari/537.36'),
    'Accept-Language': 'en-US, en;q=0.5',
}

# user-defined function: request the page and return its HTML as text
def getdata(url):
    r = requests.get(url, headers=HEADERS)
    return r.text

# pass the URL into getdata() and parse the returned HTML
def html_code(url):
    htmldata = getdata(url)
    soup = BeautifulSoup(htmldata, 'html.parser')
    return soup

url = ("https://www.amazon.in/Columbia-Mens-wind-"
       "resistant-Glove/dp/B0772WVHPS/?_encoding=UTF8&pd_rd"
       "_w=d9RS9&pf_rd_p=3d2ae0df-d986-4d1d-8c95-aa25d2ade606&pf"
       "_rd_r=7MP3ZDYBBV88PYJ7KEMJ&pd_rd_r=550bec4d-5268-41d5-"
       "87cb-8af40554a01e&pd_rd_wg=oy8v8&ref_=pd_gw_cr_cartx&th=1")

soup = html_code(url)
print(soup)

Output:

Note: The output contains only the raw HTML code of the page.
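The getdata() function above assumes every request succeeds. A slightly more defensive variant is sketched below; the timeout value, the injectable `get` parameter, and the choice to return None on failure are assumptions made for illustration, not part of the original script.

```python
import requests

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept-Language": "en-US, en;q=0.5",
}


def getdata(url, get=requests.get, timeout=10):
    """Fetch a page and return its HTML, or None if the request fails.

    `get` is injectable (an assumption of this sketch) so the function
    can be exercised without touching the network.
    """
    try:
        response = get(url, headers=HEADERS, timeout=timeout)
        response.raise_for_status()  # raise on 4xx/5xx responses
    except requests.RequestException:
        return None
    return response.text
```

Callers can then check for None before handing the text to BeautifulSoup, instead of parsing an error page by accident.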

Now that the basic setup is complete, let us look at how to scrape specific pieces of data.

Scrape customer name

Find the customer names using the span tag with class_ = a-profile-name. To check the appropriate element, open the webpage in the browser, right-click the element, and inspect it, as shown in the picture.

To use the find_all() method, you must give the tag name and attribute along with their respective values.

def cus_data(soup):
    # find every span tag with the reviewer-name class
    # and collect its text
    cus_list = []
    for item in soup.find_all("span", class_="a-profile-name"):
        cus_list.append(item.get_text())
    return cus_list

cus_res = cus_data(soup)
print(cus_res)

Output:

['Amaze', 'Robert', 'D. Kong', 'Alexey', 'Charl', 'RBostillo']
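The same find_all() lookup can be tried offline before pointing it at a live page. The sketch below runs against a small, made-up HTML snippet; the markup is hypothetical and much simpler than a real Amazon page, whose class names can change at any time.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration only.
SAMPLE_HTML = """
<div class="review">
  <span class="a-profile-name">Amaze</span>
  <div class="review-text-content"><span>Warm and light.</span></div>
</div>
<div class="review">
  <span class="a-profile-name">Robert</span>
  <div class="review-text-content"><span>Runs a bit small.</span></div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Tag name plus class attribute, exactly as in cus_data()
names = [tag.get_text(strip=True)
         for tag in soup.find_all("span", class_="a-profile-name")]
print(names)  # ['Amaze', 'Robert']
```

Testing selectors on a saved snippet like this makes it easier to tell a wrong selector apart from a blocked request.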

Scrape user review:

Now use the same procedure as before to find the customer reviews. Look for a unique class name on a specific tag, in this case, div.

def cus_rev(soup):
    # find every div tag that holds review text
    # and join the text into one string
    data_str = ""
    for item in soup.find_all("div", class_="a-expander-content reviewText review-text-content a-expander-partial-collapse-content"):
        data_str = data_str + item.get_text()
    # split the combined text into lines
    result = data_str.split("\n")
    return result

rev_data = cus_rev(soup)

# keep only the non-empty lines
rev_result = []
for i in rev_data:
    if i != "":
        rev_result.append(i)
rev_result
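The split-and-filter step above can also be written as one small helper. This is a minimal sketch; the function name clean_review_lines and the sample text are illustrative, and unlike the loop above it also strips surrounding whitespace from each line.

```python
def clean_review_lines(raw_text):
    """Split scraped review text into lines, dropping blank ones."""
    return [line.strip() for line in raw_text.splitlines() if line.strip()]


sample = "\n  Great gloves, very warm.\n\n\nFit is slightly tight.  \n"
print(clean_review_lines(sample))
# ['Great gloves, very warm.', 'Fit is slightly tight.']
```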

Scraping Review Images:

Use the same procedure as before to get image links from product reviews. The tag name and attribute are passed to find_all(), as seen above.

def rev_img(soup):
    # find every img tag with the review-thumbnail class
    # and collect its src link
    images = []
    for img in soup.find_all('img', class_="cr-lightbox-image-thumbnail"):
        images.append(img.get('src'))
    return images

img_result = rev_img(soup)
img_result

Saving information into a CSV file:

In this case, the information will be saved into a CSV file. The data will first be transformed into a dataframe, and then it will be exported into a CSV file. Let's look at how to export a Pandas DataFrame to a CSV file. We'll use the to_csv() method to save a DataFrame as a CSV file.

Syntax : to_csv(parameters) Parameters : path_or_buf : File path or object, if None is provided the result is returned as a string.

import pandas as pd

# initialise data of lists
data = {'Name': cus_res,
        'review': rev_result}

# Create DataFrame
df = pd.DataFrame(data)

# Save the output
df.to_csv('amazon_review.csv')
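One caveat: pandas raises a ValueError when the lists in the dictionary have different lengths, which can happen if the page yields more names than review lines or the reverse. As a hedged alternative, the sketch below uses Python's standard csv module instead of pandas and pads the shorter list with empty strings. The function name write_reviews and the sample rows are illustrative.

```python
import csv
import io
from itertools import zip_longest


def write_reviews(handle, names, reviews):
    """Write name/review pairs as CSV rows, padding the shorter list."""
    writer = csv.writer(handle)
    writer.writerow(["Name", "review"])
    for name, review in zip_longest(names, reviews, fillvalue=""):
        writer.writerow([name, review])


# Demonstrate with an in-memory buffer instead of a real file
buffer = io.StringIO()
write_reviews(buffer, ["Amaze", "Robert"], ["Warm and light."])
print(buffer.getvalue())
```

To write to disk, open the file with `open('amazon_review.csv', 'w', newline='', encoding='utf-8')` and pass the handle to write_reviews(). Padding keeps the script running, but it does not guarantee that each review sits next to the right name, so checking the list lengths first is still worthwhile.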

The reviews are a window into the market's pulse, revealing essential clues that unlock the secrets to customer satisfaction. The benefits of Amazon Data Scraping help guide businesses toward informed decisions and product improvements.

Amazon reviews are a great place to find information. They tell us what customers think, whether they are happy, unhappy, or have suggestions. This data is critical to organizations because it shows what consumers like, dislike, and want from products.

Product evaluations usually offer helpful advice. This guidance helps businesses enhance their products. It functions similarly to a guidebook and aids companies in resolving issues, streamlining procedures, and outpacing rivals by giving customers what they desire.

Amazon review scraping lets you play detective. You can see how your product stacks up against competitors. It's similar to trying to score more touchdowns by studying your competitors' playbooks. Beyond data collection, web scraping Amazon reviews gives you a competitive edge and access to insightful customer data.

Exploring Amazon's treasure trove of product reviews unveils a goldmine of insights. Each review acts as a guide, leading to an understanding of customer preferences, desires, and grievances. Sifting through these reviews offers a peek into the minds and emotions of consumers, akin to overhearing a conversation in which customers candidly share their joys and frustrations.

Scraping publicly accessible information, such as product ratings, review summaries, or the number of responses to a specific review, is generally treated as lawful. You still need to use caution when handling personal information, especially the reviewer's name and avatar, since these could be used to identify the user. Unauthorized scraping, however, is regarded as a breach of Amazon's rules, which are designed to safeguard company data and intellectual property.

According to the company's Conditions of Use, users are not permitted to use automated tools, including bots or scrapers, to access or gather data from Amazon's website without express permission. The relevant portion of Amazon's Conditions of Use states:

"You may not use data mining, robots, screen scraping, or similar data gathering and extraction tools on this site, except with our express written consent."

Unauthorized review scraping on Amazon can have legal repercussions, including possible legal action from Amazon against the scraper. Review the terms of service of the website in question, ask for permission, or use any authorized Application Programming Interfaces (APIs) the platform offers if you have a specific use case for collecting reviews on Amazon or any other website. To guarantee adherence to the policies and guidelines of the website you plan to scrape, always prioritize legal and ethical considerations.
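One way to act on the caution about reviewer names is to replace each name with a pseudonymous token before storing the data. The sketch below is only an illustration of that idea, using a keyed hash from Python's standard library; the function name, the key, and the 16-character token length are assumptions, and this alone does not make a dataset compliant with any particular privacy law.

```python
import hashlib
import hmac


def pseudonymize(name, secret_key):
    """Return a stable token for a reviewer name using a keyed SHA-256 hash."""
    digest = hmac.new(secret_key, name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


key = b"replace-with-a-private-key"  # hypothetical key for illustration
tokens = [pseudonymize(n, key) for n in ["Amaze", "Robert", "Amaze"]]
print(tokens[0] == tokens[2])  # True: the same name maps to the same token
```

Because the token is stable, reviews by the same person can still be grouped for analysis without keeping the name itself.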

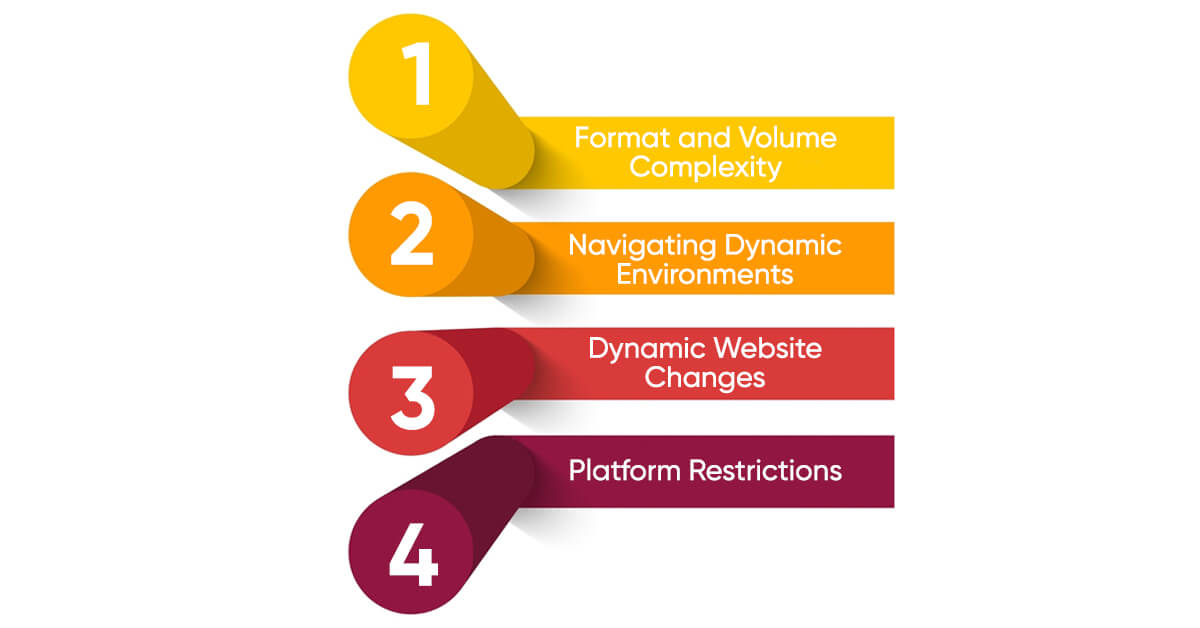

Amazon review scraping helps businesses in the decision-making process. However, there are several challenges to web scraping Amazon reviews.

Reviews come in many lengths, languages, and formats, and it can be challenging to sort through and organize this wide range of data. In addition, the sheer volume of reviews can overwhelm scraping tools, making efficient data collection a major challenge.

There is constant change within the dynamic virtual geography of online platforms such as Amazon. The ground underneath scraping tools is continuously moving due to the regular revisions made to website design and the reinforcement of security measures. Adaptability is necessary since these changes necessitate ongoing adjustments and recalibrations of scraping techniques. Maintaining smooth data extraction turns into a never-ending struggle that calls for close observation and quick adaptations to get around these always shifting digital landscapes and guarantee the constant flow of data.

Amazon's online world is constantly changing. Amazon often updates its website and adds new security measures, which makes it hard for tools to gather information from the site. These changes break scraping tools and make it necessary to adjust them quickly. Adapting to these changes isn't just good practice; it's essential for keeping the data flowing. If a tool cannot keep up and change quickly, collecting data from Amazon's always-changing website gets tough.
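A common way to soften the impact of layout changes is to keep a list of candidate selectors and use the first one that matches. The sketch below illustrates the pattern with bs4's select() method. The first selector is the class used earlier in this article; the second is an invented fallback, included only to show the mechanism.

```python
from bs4 import BeautifulSoup

# The second entry is hypothetical, not a real Amazon class name.
NAME_SELECTORS = ["span.a-profile-name", "span.reviewer-name"]


def select_first_match(soup, selectors):
    """Return the text of all elements matched by the first selector that hits."""
    for selector in selectors:
        matches = soup.select(selector)
        if matches:
            return [m.get_text(strip=True) for m in matches]
    return []  # nothing matched: the layout probably changed again


old_layout = BeautifulSoup('<span class="a-profile-name">Amaze</span>', "html.parser")
new_layout = BeautifulSoup('<span class="reviewer-name">Amaze</span>', "html.parser")

print(select_first_match(old_layout, NAME_SELECTORS))  # ['Amaze']
print(select_first_match(new_layout, NAME_SELECTORS))  # ['Amaze']
```

An empty result is a useful signal in its own right: logging it makes a broken selector visible early instead of silently producing an empty CSV file.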

Navigating Amazon's website isn't easy. They're strict about stopping automated scraping, making it hard to get in and collect data. This strictness creates lots of challenges. You must keep changing how you do things to get around their strict security. To succeed, you must keep developing new ways to overcome their barriers. It's a constant back-and-forth where you must be smart and creative to outsmart Amazon's online guards.

Each attempt to breach these restrictions demands ingenuity and adaptability, crafting new approaches to slip through the barricades erected to safeguard data.

Choosing the appropriate tool for your particular needs can streamline data extraction and yield the most up-to-date and accurate data. Respecting legal and ethical norms is crucial when it comes to Amazon scraping. To prevent legal consequences and account suspension, follow Amazon's terms of service and any applicable web scraping restrictions. Responsible scraping means putting safeguards in place, such as rate limits, to avoid overloading Amazon's servers and to reduce the chance of being blocked. Scrapers may also run into difficulties such as IP limits and CAPTCHAs. By following the tactics and best practices recommended by Reviewgators, you may reduce the likelihood of facing these challenges and keep your scraping efforts running without interruption.
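A rate limit can be as simple as enforcing a minimum pause between consecutive requests. The sketch below shows one way to do that with the standard library; the class name Throttle, the two-second interval in the usage note, and the injectable clock and sleep functions are assumptions made for illustration.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                # Too soon after the previous call: pause for the difference
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In a scraping loop, create one `Throttle(2.0)` and call its wait() method immediately before each request, so requests are spaced at least two seconds apart.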
