How Web Scraping is Used to Extract Google Reviews using Selenium (Python)

27 January 2022

Web scraping is a useful tool, but it can also lead to ethical and legal ambiguity. To begin with, a scraper can send a large number of requests to a website every second. Browsing at such speeds is bound to attract attention: that many requests can clog up a website's servers and, in extreme cases, might be considered a denial-of-service attack. Similarly, any web page that requires a login may contain information that is not considered public, and scraping such pages might get you into legal trouble.
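One practical way to keep your request rate modest is to enforce a minimum delay between consecutive page loads. The sketch below is a small, hypothetical helper (`Throttle` is our own name, not part of Selenium) that you would call before each `driver.get()` or scroll. The 2-second interval in the usage comment is an arbitrary example value, not a documented Google limit.

```python
import time


class Throttle:
    """Hypothetical helper: enforce a minimum interval between actions."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last = None  # monotonic timestamp of the previous action

    def wait(self) -> float:
        """Sleep just long enough to respect min_interval; return seconds slept."""
        now = time.monotonic()
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last = time.monotonic()
        return slept


# Usage sketch (assumes a Selenium `driver` and a list of `urls` already exist):
# throttle = Throttle(min_interval=2.0)
# for url in urls:
#     throttle.wait()
#     driver.get(url)
```

The first call never sleeps; every later call sleeps only for whatever part of the interval has not already passed, so slow page loads are not penalized twice.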

A developer may need to work with Google (Maps) reviews in a variety of situations. Anyone familiar with the Google My Business API knows that fetching reviews through the API requires an accountId for every location (business). Scraping reviews can therefore be quite useful when a developer wants to work with reviews from several locations (or does not have access to a Google business account).

This post shows how to use Selenium and BeautifulSoup to scrape Google reviews.

Web scraping is the technique of extracting data from web pages. Several Python libraries can help with this, including:

Beautiful Soup parses HTML and XML documents into a tree, which makes it easy to pull data out of the DOM.

Scrapy is an open-source framework for crawling websites and extracting data at scale.

Selenium is a browser automation tool that lets you interact with JavaScript-driven pages during scraping (clicking, scrolling, filling in forms, dragging and dropping, switching between windows and frames, etc.).

Here we will load the page with Selenium, scroll to load more content, and then parse the resulting HTML with Beautiful Soup.

To install Selenium:

```shell
# Installing with pip
pip install selenium

# Installing with conda
conda install -c conda-forge selenium
```

To control a browser, Selenium also needs a browser driver, such as ChromeDriver for Chrome.

We will parse the HTML page with BeautifulSoup and retrieve the data we need.

To install BeautifulSoup:

```shell
# Installing with pip
pip install beautifulsoup4

# Installing with conda
conda install -c anaconda beautifulsoup4
```

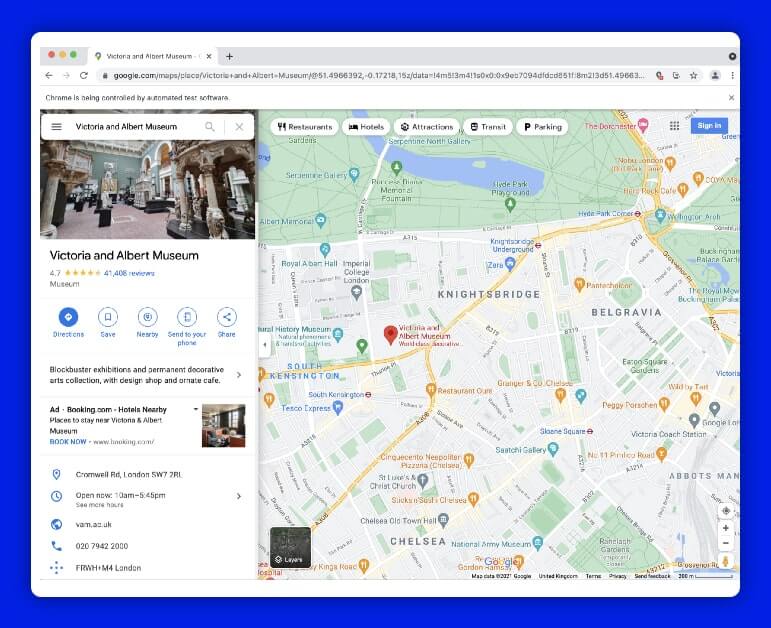

Once the driver is installed, initializing it takes a single line. We then pass the Google Maps URL of the place we want reviews for to the get() method:

```python
from selenium import webdriver

driver = webdriver.Chrome()

# London Victoria & Albert Museum URL
url = 'https://www.google.com/maps/place/Victoria+and+Albert+Museum/@51.4966392,-0.17218,15z/data=!4m5!3m4!1s0x0:0x9eb7094dfdcd651f!8m2!3d51.4966392!4d-0.17218'
driver.get(url)
```

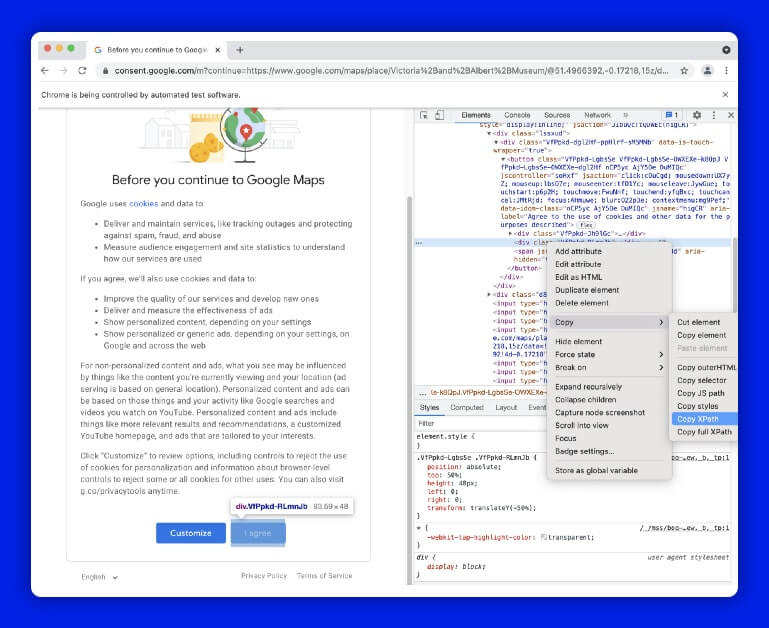

Before reaching the actual address we specified in the url variable, the web driver is quite likely to land on Google's consent page asking you to accept cookies. If so, you can proceed by clicking the "I agree" button.

Selenium provides several ways to locate elements; in this case, I used an XPath locator:

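```python
import time

from selenium.webdriver.common.by import By

# Accept Google's cookie consent page by clicking "I agree"
driver.find_element(By.XPATH, '//*[@id="yDmH0d"]/c-wiz/div/div/div/div[2]/div[1]/div[4]/form/div[1]/div/button').click()

# To make sure content is fully loaded, we can use time.sleep() after navigating to each page
time.sleep(3)
```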
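A fixed `time.sleep()` either wastes time or turns out too short on a slow connection. The usual alternative is to poll for a condition until a timeout expires. Selenium ships this as `WebDriverWait`, for example `WebDriverWait(driver, 10).until(EC.element_to_be_clickable((By.XPATH, xpath)))` with `from selenium.webdriver.support import expected_conditions as EC`. The plain-Python sketch below only illustrates the idea behind such a wait; `wait_until` and `consent_xpath` are hypothetical names, and the timeout and poll interval are illustrative values.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 10.0,
               poll: float = 0.5) -> T:
    """Call `condition` repeatedly until it returns a truthy value.

    Raises TimeoutError if nothing truthy is returned within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)


# Usage sketch (assumes `driver`, `By`, and a hypothetical `consent_xpath` string):
# find_elements() returns an empty (falsy) list until the button appears.
# button = wait_until(lambda: driver.find_elements(By.XPATH, consent_xpath))[0]
# button.click()
```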

This is where things get complicated. The URL in the example takes you straight to the specified place, where you click the "41,408 reviews" button to load the reviews.

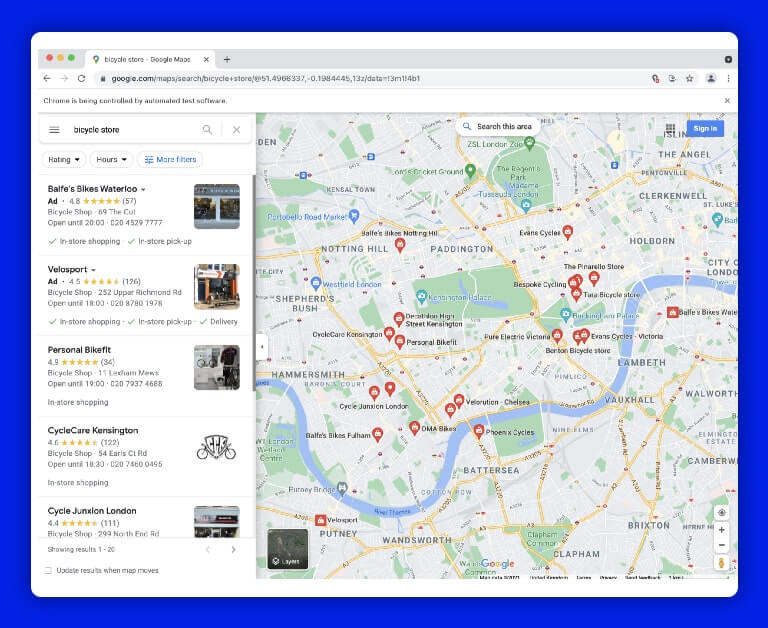

On Google Maps, however, there are various kinds of profile pages. In many cases the location is listed alongside several other locations in the left-hand panel, and there will almost certainly be some ads at the top of the list. The bicycle-store search URL in the code below, for example, leads to such a page.

To avoid getting stuck on these different layouts or loading the wrong page, we add some error-handling code before loading the reviews:

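```python
import time

from bs4 import BeautifulSoup
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.common.by import By

url = 'https://www.google.com/maps/search/bicycle+store/@51.5026862,-0.1430242,13z/data=!3m1!4b1'
driver.get(url)

# Note: these class names are generated by Google and may have changed since this post was written
try:
    # Single place profile: the reviews link is shown directly
    driver.find_element(By.CLASS_NAME, "widget-pane-link").click()
except NoSuchElementException:
    # Results list: parse the HTML to see whether the list starts with ads
    response = BeautifulSoup(driver.page_source, 'html.parser')
    ad_badges = response.find_all('span', {'class': 'ARktye-badge'})

    # Check if there are any paid ads and avoid them
    if ad_badges:
        ad_count = len(ad_badges)
        li = driver.find_elements(By.CLASS_NAME, "a4gq8e-aVTXAb-haAclf-jRmmHf-hSRGPd")
        # Ads sit at the top of the list, so index ad_count is the first organic result
        li[ad_count].click()
    else:
        driver.find_element(By.CLASS_NAME, "a4gq8e-aVTXAb-haAclf-jRmmHf-hSRGPd").click()

    time.sleep(5)
    driver.find_element(By.CLASS_NAME, "widget-pane-link").click()
```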
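The try/except approach discovers the layout at runtime. When you build the list of URLs yourself, a cheap pre-check is to look at the URL path: the museum URL used earlier contains `/maps/place/`, while the bicycle-store URL contains `/maps/search/`. The helper below is a hypothetical heuristic based only on those two examples; Google can redirect a search to a single place or change its URL scheme, so treat the result as a hint and keep the runtime fallback.

```python
from urllib.parse import urlparse


def maps_page_kind(url: str) -> str:
    """Hypothetical heuristic: guess the Google Maps layout from the URL path.

    '/maps/place/...'  -> 'place'  (single profile, reviews link shown directly)
    '/maps/search/...' -> 'search' (list of results, possibly with ads on top)
    anything else      -> 'unknown'
    """
    path = urlparse(url).path
    if path.startswith("/maps/place/"):
        return "place"
    if path.startswith("/maps/search/"):
        return "search"
    return "unknown"
```

For example, you could send "place" URLs directly to the reviews step and reserve the ad-skipping logic for "search" URLs.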

Whichever layout Google served, we should now be on the reviews page. However, the initial load only delivers 10 reviews, and each subsequent scroll loads another 10. We'll calculate how many times we need to scroll and use the Chrome driver's execute_script() method to load all of the reviews for the location.

```python
import math
import time

from selenium.webdriver.common.by import By

# Find the total number of reviews (the label reads like "41,408 reviews")
total_number_of_reviews = driver.find_element(
    By.XPATH, '//*[@id="pane"]/div/div[1]/div/div/div[2]/div[2]/div/div[2]/div[2]'
).text.split(" ")[0]
total_number_of_reviews = int(total_number_of_reviews.replace(',', ''))

# Find scroll layout
scrollable_div = driver.find_element(By.XPATH, '//*[@id="pane"]/div/div[1]/div/div/div[2]')

# Scroll as many times as necessary to load all reviews:
# the first load shows 10 reviews and each scroll adds 10 more
for _ in range(math.ceil(total_number_of_reviews / 10) - 1):
    driver.execute_script('arguments[0].scrollTop = arguments[0].scrollHeight',
                          scrollable_div)
    time.sleep(1)
```


We first locate the scrollable element (scrollable_div) and then scroll it inside the for loop above. The scroll bar starts at the top, which means its vertical position is 0. On each iteration, we pass a simple JavaScript snippet to the Chrome driver (driver.execute_script) that sets the element's vertical position (.scrollTop) to its full height (.scrollHeight). In effect, the scroll bar is dragged vertically from position 0 to position Y, where Y can be any height you choose; using scrollHeight jumps straight to the bottom, which triggers the next batch of reviews to load.
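The scroll count is easy to get wrong by one. If you compute it as `round(total / 10 - 1)`, a place with 15 reviews gives `round(0.5)`, which Python rounds to 0, so the last five reviews would never load; `math.ceil` avoids that. The sketch below isolates that arithmetic and also shows a common alternative stopping rule: keep scrolling until the element's scrollHeight stops growing. The function names are hypothetical, the "10 per load" figure comes from the text above and may change, and the browser calls are injected as plain callables so the logic can be exercised without a browser. A single unchanged height reading can also just mean a slow network, so the pause matters.

```python
import math
import time
from typing import Callable


def parse_review_count(label: str) -> int:
    """Turn a label such as '41,408 reviews' into 41408 (label format assumed)."""
    return int(label.split(" ")[0].replace(",", ""))


def scrolls_needed(total_reviews: int, per_load: int = 10) -> int:
    """Scrolls required if the first load shows `per_load` reviews and
    every scroll adds `per_load` more."""
    return max(0, math.ceil(total_reviews / per_load) - 1)


def scroll_until_stable(scroll_once: Callable[[], None],
                        get_height: Callable[[], int],
                        pause: float = 1.0,
                        max_rounds: int = 10_000) -> int:
    """Alternative stopping rule: scroll until the content height stops growing.

    Returns the number of scrolls performed.
    """
    last_height = get_height()
    for rounds in range(1, max_rounds + 1):
        scroll_once()
        time.sleep(pause)  # give lazily loaded reviews time to arrive
        new_height = get_height()
        if new_height == last_height:
            return rounds
        last_height = new_height
    return max_rounds


# Wiring the alternative to Selenium (assumes `driver` and `scrollable_div` from above):
# scroll_until_stable(
#     lambda: driver.execute_script(
#         'arguments[0].scrollTop = arguments[0].scrollHeight', scrollable_div),
#     lambda: driver.execute_script(
#         'return arguments[0].scrollHeight', scrollable_div),
# )
```

For the museum's 41,408 reviews, `scrolls_needed` gives 4,140 scrolls; the height-based rule needs no review count at all, at the cost of one extra scroll to confirm the end.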

Our driver has now scrolled to the end of the reviews panel and loaded all of the available review elements. We can now parse them and extract the information we need (reviewer, review text, review sentiment, and so on). To do so, find the class name of the outermost element that wraps each review inside the scroll layout (it holds all the information about that individual review) and use it to extract a list of reviews.

```python
from bs4 import BeautifulSoup

response = BeautifulSoup(driver.page_source, 'html.parser')
reviews = response.find_all('div', class_='ODSEW-ShBeI NIyLF-haAclf gm2-body-2')
```

We can now write a function that pulls the important data out of the parsed reviews. The code below accepts the result set and returns a pandas DataFrame with the extracted data in the Review Rate, Review Time, and Review Text columns.

```python
import pandas as pd


def get_review_summary(result_set):
    rev_dict = {'Review Rate': [],
                'Review Time': [],
                'Review Text': []}

    for result in result_set:
        # The star rating is read from the aria-label attribute of the rating span
        review_rate = result.find('span', class_='ODSEW-ShBeI-H1e3jb')["aria-label"]
        review_time = result.find('span', class_='ODSEW-ShBeI-RgZmSc-date').text
        review_text = result.find('span', class_='ODSEW-ShBeI-text').text

        rev_dict['Review Rate'].append(review_rate)
        rev_dict['Review Time'].append(review_time)
        rev_dict['Review Text'].append(review_text)

    return pd.DataFrame(rev_dict)
```
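The Review Rate column holds the raw aria-label text rather than a number, so to average or plot ratings you will usually want an integer. The hypothetical helper below pulls the first run of digits out of the label. It assumes the label contains the star count as digits (something like " 4 stars "), which may not hold for every interface language or page version, and it returns None when no digits are present.

```python
import re
from typing import Optional


def parse_rating(aria_label: str) -> Optional[int]:
    """Extract the first integer from a rating label such as ' 4 stars '.

    The label format is an assumption; returns None if no digit is found.
    """
    match = re.search(r"\d+", aria_label)
    return int(match.group()) if match else None


# Usage sketch (assumes df = get_review_summary(reviews) from above):
# df['Rating'] = df['Review Rate'].map(parse_rating)
```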

This post provided a basic introduction to web scraping with Python, along with a simple example. Data sourcing can require more complex data collection techniques, and without the right tools it can be a time-consuming and costly task.

If you are looking to scrape Google reviews using Python and Selenium, contact ReviewGator today!
