How Web Scraping Is Used To Extract Book Ratings Data Using BeautifulSoup

16 May 2022

Web scraping is the process of fetching large amounts of data from websites and saving it in the required format. Copying and pasting data by hand consumes both time and energy, so automated web scraping is the better option, and when large-scale data is needed, manual collection becomes impossible. Various tools automate the process, and one such library is BeautifulSoup.

BeautifulSoup is a Python web scraping package for parsing HTML and XML documents and extracting data from them.

A reader's rating of a book is given in stars, from highest (5) to lowest (0), in half-star steps. The possible values are:

0 0.5 1 1.5 2 2.5 3 3.5 4 4.5 5

A book review is a reader's written opinion about a book. Reviews are always attached to ratings: anyone who submits a review must also give a rating. In this article, "rating" therefore covers both ratings with and without a review.

In total, ratings were fetched from 890 books: 18,875 URLs were scraped, yielding 56,788 reviews and 183,840 ratings.

Let us walk through the step-by-step process of extracting book ratings data.

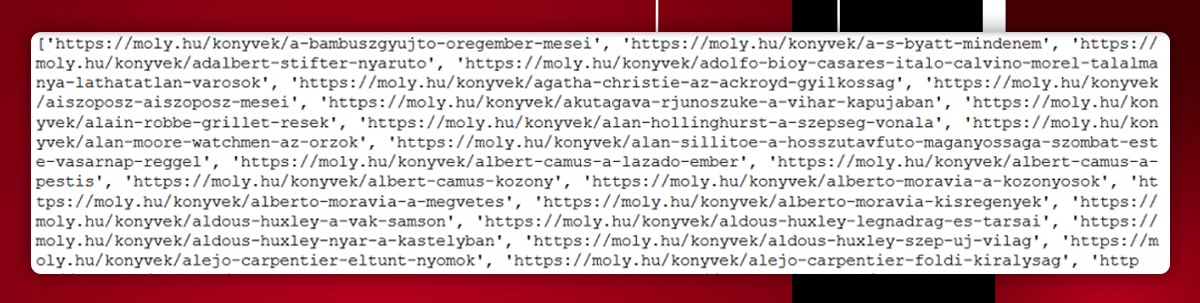

First, we need to get every book's URL from the list.

Getting the URLs and saving them to `book_urls` was easy with a simple while loop:

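```python
import re  # used later to match the review <div> ids
import time

import requests
from bs4 import BeautifulSoup

book_urls = []
page = 1

# Walk through list pages 1 to 44 (the loop stops before page 45)
while page != 45:
    result = requests.get(f"https://moly.hu/listak/1001-konyv-kituntetes?page={page}")
    time.sleep(1)  # pause between requests so the server is not overloaded
    src = result.content
    soup = BeautifulSoup(src, "lxml")
    # Each book on the list page is a link with these classes
    all_a = soup.find_all("a", class_="fn book_selector")
    book_urls.extend(["https://moly.hu" + a["href"] for a in all_a])
    page += 1
```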

BeautifulSoup and Requests are used to get the book URLs and to extract data from the pages.

The `time.sleep(1)` call is included for safety: it pauses execution for one second after each request so the web server is not overburdened.
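The fixed `time.sleep(1)` can also be expressed as a small throttle that enforces a minimum gap between requests, so no extra delay is added when parsing already took longer than the gap. A minimal sketch of that idea; `Throttle` is a hypothetical helper (not part of Requests or BeautifulSoup), and the actual `requests.get(...)` call is left out so the example runs offline.

```python
import time


class Throttle:
    """Hypothetical helper: enforce a minimum interval between successive wait() calls."""

    def __init__(self, min_interval: float = 1.0) -> None:
        self.min_interval = min_interval
        self._last = None  # monotonic timestamp of the previous wait()

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                # Sleep only for the part of the interval that has not elapsed yet
                time.sleep(remaining)
        self._last = time.monotonic()


# Three "requests" with a 0.2 s minimum gap take at least 0.4 s in total
throttle = Throttle(min_interval=0.2)
start = time.monotonic()
for _ in range(3):
    throttle.wait()  # in the scraper this would sit right before requests.get(...)
elapsed = time.monotonic() - start
```

In the scraping loops, `throttle.wait()` would take the place of the `time.sleep(1)` that follows each request.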

When this code had finished running, the `book_urls` list held all 890 book URLs.

After gathering all the books' URLs, I could proceed with the analysis by looping over `book_urls` with a for loop.

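For each book I created a dictionary that kept the following information: the title, the URL, the list of all its ratings, and the list of its ratings that came with a review.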

To give a better idea, here is what a book dictionary looked like after one loop iteration:

```python
{
    'title': 'A bambuszgyűjtő öregember meséi',
    'url': 'https://moly.hu/konyvek/a-bambuszgyujto-oregember-mesei',
    'ratings': [5.0, 5.0, 4.5, 5.0, 5.0, 5.0, 4.0, 4.5, 4.5, 4.0, 3.5, 4.0, 4.5, 5.0, 3.0, 4.5, 5.0, 4.0, 4.0, 5.0, 5.0, 4.0, 4.5, 4.5, 5.0, 5.0, 5.0, 5.0, 4.5, 4.0, 4.0, 0, 4.0, 5.0, 5.0, 5.0, 5.0, 3.0, 4.5, 4.0, 3.5, 4.0, 5.0, 4.5, 4.0, 4.0, 4.5, 5.0, 4.0, 4.5, 5.0, 4.0, 4.5, 4.5, 5.0, 4.0, 4.5, 3.0, 5.0, 5.0, 4.5, 4.0, 5.0, 4.0, 3.5],
    'reviews': [5.0, 5.0, 5.0, 5.0, 5.0, 4.0, 4.5, 5.0, 4.5, 5.0, 4.0, 4.0, 5.0, 5.0, 4.0, 4.5, 5.0, 5.0, 5.0, 4.5, 0, 5.0, 5.0, 5.0, 3.0, 4.0, 5.0, 4.0, 4.0, 5.0, 4.5, 5.0, 4.5, 4.0, 3.0, 5.0]
}
```

Once a book's dictionary was complete, it was appended to the `books` list, which was created before the for loop, so that all the books remain accessible afterwards.

In short, a dictionary is created for each of the 890 books. Before the loop, the `books` and `review_pages` lists and the `star_values` dictionary are created:

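```python
books = []          # one dictionary per book
review_pages = []   # backup of every review page URL visited

# Width of the "rater-on" span in pixels -> star rating
star_values = {
    "80": 5.0,
    "72": 4.5,
    "64": 4.0,
    "56": 3.5,
    "48": 3.0,
    "40": 2.5,
    "32": 2.0,
    "24": 1.5,
    "16": 1.0,
    "8": 0.5,
    "0": 0,
}
```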

We need `books` to keep track of the book dictionary produced by each loop iteration. The `review_pages` list is not used in the analysis; it serves only as a backup. Losing the collected URLs would be costly, so every review page URL is stored there.
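Since `review_pages` exists only as a backup, it helps to write it to disk now and then so the URLs survive a crash. A minimal sketch using the standard `json` module; the helper names `save_backup` and `load_backup` and the file name `review_pages.json` are assumptions for illustration.

```python
from __future__ import annotations

import json
from pathlib import Path


def save_backup(urls: list[str], path: Path) -> None:
    """Hypothetical helper: write the URL list as JSON without risking a half-written file."""
    tmp = path.parent / (path.name + ".tmp")
    tmp.write_text(json.dumps(urls, ensure_ascii=False, indent=2), encoding="utf-8")
    # Replace the old backup only after the new one is fully written
    tmp.replace(path)


def load_backup(path: Path) -> list[str]:
    """Hypothetical helper: read the URL list back, or return [] if no backup exists yet."""
    if not path.exists():
        return []
    return json.loads(path.read_text(encoding="utf-8"))
```

For example, calling `save_backup(review_pages, Path("review_pages.json"))` after each book keeps the backup at most one book behind.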

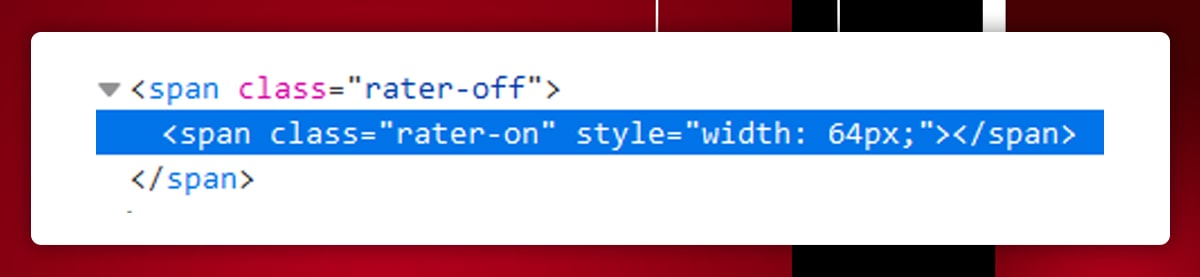

This screenshot from the source code of a review page shows what `star_values` is used for:

The rating is encoded as a pixel width: a 5.0 rating is 80px wide, a 4.5 rating is 72px wide, a 4.0 rating is 64px wide, and so on, 8px per half star.

BeautifulSoup only gives us this pixel width from the HTML, so the `star_values` dictionary converts it into the actual rating, for example 80px into a 5-star rating:

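```python
# The style attribute presumably looks like "width: 80px;":
# take the part after the first space, cut at "px;" -> "80" -> star_values["80"] == 5.0
star_value = star_values[review.find("span", class_="rater-on")["style"].split(" ", 1)[1].split("px;", 1)[0]]
```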
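Because every half star adds 8px, the `star_values` lookup is equivalent to dividing the width by 16. The sketch below assumes the `style` attribute has the shape `width: 80px;`, which is what the split-based one-liner implies; `width_to_rating` is a hypothetical helper that uses a regular expression instead of string splitting, so extra or missing spaces do not break it.

```python
import re

# Same mapping as star_values: 16px per star, 8px per half star ("0" -> 0.0 ... "80" -> 5.0)
STAR_VALUES = {str(px): px / 16 for px in range(0, 81, 8)}

# Assumed shape of the style attribute, e.g. "width: 72px;"
WIDTH_RE = re.compile(r"width:\s*(\d+)px")


def width_to_rating(style: str) -> float:
    """Hypothetical helper: turn a style string such as 'width: 72px;' into a star rating."""
    match = WIDTH_RE.search(style)
    if match is None:
        raise ValueError(f"no pixel width in style: {style!r}")
    # A KeyError here would signal a width that is not a multiple of 8px
    return STAR_VALUES[match.group(1)]
```

For instance, `width_to_rating("width: 72px;")` returns `4.5`, the same value the original lookup produces.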

Inside the for loop, each book then gets its own dictionary plus two lists, one for its ratings and one for its ratings with reviews:

```python
book = {}
book_ratings = []   # every rating of this book
book_reviews = []   # only the ratings that come with a review
```

Next, the book's main page is requested and parsed, and its title and URL are stored:

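```python
result = requests.get(book_url)
src = result.content
soup = BeautifulSoup(src, "lxml")
# The title sits in the <span> inside <h1>; drop trailing whitespace and zero-width spaces (U+200B)
book["title"] = soup.find("h1").find("span").get_text().rstrip().replace("\u200b", "")
book["url"] = book_url
```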

After that, I loaded the book's first review page into the `soup` variable:

```python
# "ertekelesek" (Hungarian for "ratings") is the book's ratings/reviews subpage
page = 1
result = requests.get(f"{book_url}/ertekelesek?page={page}")
time.sleep(1)
src = result.content
soup = BeautifulSoup(src, "lxml")
```

Next, a try/except/else block checks whether the book has more than one review page. This matters because the two cases need different code:

```python
try:
    # The pagination entry just before the "next page" link holds the last page number.
    # With a single page there is no "next_page" link: find() returns None and
    # .previous_sibling raises AttributeError.
    last_page = int(soup.find("a", class_="next_page").previous_sibling.previous_sibling.get_text())

# Only one review page: process the page already loaded into soup
except AttributeError:
    url = f"{book_url}/ertekelesek?page={page}"
    reviews = soup.find_all("div", id=re.compile("^review_"))
    if len(reviews) != 0:
        review_pages.append(url)
    for review in reviews:
        star_value = star_values[review.find("span", class_="rater-on")["style"].split(" ", 1)[1].split("px;", 1)[0]]
        book_ratings.append(star_value)
        # Entries that also contain review text have a <div class="atom">
        if review.find("div", class_="atom"):
            book_reviews.append(star_value)

# More than one review page: fetch and process pages 1 to last_page
else:
    while page != last_page + 1:
        url = f"{book_url}/ertekelesek?page={page}"
        review_pages.append(url)
        result = requests.get(url)
        time.sleep(1)
        src = result.content
        soup = BeautifulSoup(src, "lxml")
        reviews = soup.find_all("div", id=re.compile("^review_"))
        for review in reviews:
            star_value = star_values[review.find("span", class_="rater-on")["style"].split(" ", 1)[1].split("px;", 1)[0]]
            book_ratings.append(star_value)
            if review.find("div", class_="atom"):
                book_reviews.append(star_value)
        page += 1
```

In both cases the end result was the same: the review page URLs were added to `review_pages`, and the ratings and the ratings with reviews were added to `book_ratings` and `book_reviews`, respectively.
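The try/except/else split boils down to one rule: with no "next page" link there is a single review page, otherwise pages 1 to `last_page` are fetched. A minimal sketch of that rule as a pure function; `review_page_urls` is a hypothetical name, `None` stands in for the `AttributeError` case, and it ignores the check that skips a single page with no reviews.

```python
from __future__ import annotations

from typing import Optional


def review_page_urls(book_url: str, last_page: Optional[int]) -> list[str]:
    """Hypothetical helper: list the review page URLs the loop above would visit."""
    # last_page is None when the page has no "next_page" link (the except branch)
    pages = range(1, 2) if last_page is None else range(1, last_page + 1)
    return [f"{book_url}/ertekelesek?page={page}" for page in pages]
```

Keeping this rule separate from the network code makes the page arithmetic easy to check without sending any requests.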

After the review pages had been processed, the `book` dictionary was completed with the `book_ratings` and `book_reviews` data and appended to `books`, the list of all books:

```python
book["ratings"] = book_ratings
book["reviews"] = book_reviews
books.append(book)
```

We now have a list of 890 book dictionaries like the example above, each holding the title, URL, ratings, and ratings with reviews of one book.

The next step was simple: collect every book's ratings and ratings with reviews into two separate lists:

```python
# Flatten the per-book lists into two lists covering all books
all_reviews = []
all_ratings = []
for book in books:
    all_ratings.extend(book["ratings"])
    all_reviews.extend(book["reviews"])
```

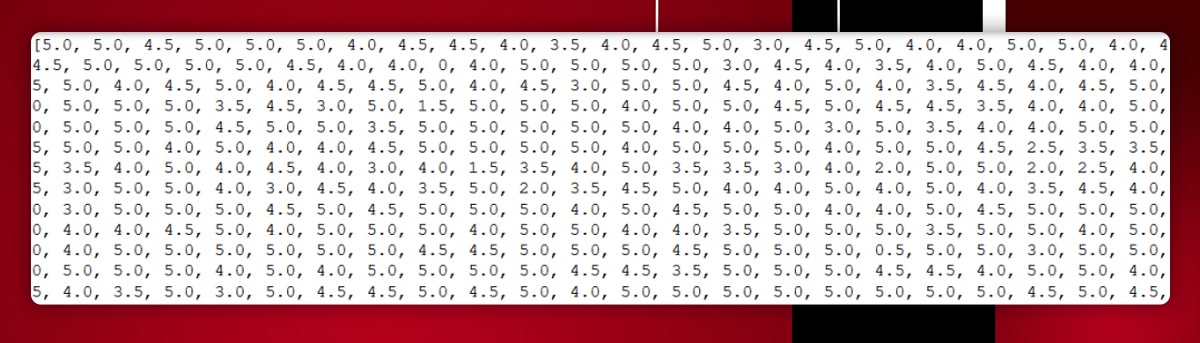

When printed, the two lists look like this:

`all_reviews` contains 56,788 ratings with reviews, and `all_ratings` contains 183,840 ratings.

`Counter()` is used to count how many times each rating appears in `all_ratings` and `all_reviews`, and `dict()` then converts the results to dictionaries:

```python
from collections import Counter

# Count how often each star value occurs
c_all_ratings = Counter(all_ratings)
c_all_reviews = Counter(all_reviews)
all_ratings_dict = dict(c_all_ratings)
all_reviews_dict = dict(c_all_reviews)
```

Printing `all_ratings_dict` gives:

```
{5.0: 76783, 4.5: 31517, 4.0: 35901, 3.5: 15286, 3.0: 11813, 0: 2237, 1.5: 945, 2.5: 3800, 2.0: 3261, 0.5: 983, 1.0: 1238}
```

This means there were 76,783 ratings with a star value of 5.0, 31,517 ratings with a star value of 4.5, and so on.
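One detail about the `dict()` conversion: a `Counter` returns 0 for a rating that never occurred, while a plain dictionary raises `KeyError`. This matters whenever a rating value might be absent, for example in a small sample. A short illustration with made-up ratings, not the scraped data:

```python
from collections import Counter

# Made-up ratings for illustration; 0.5 never occurs
ratings = [5.0, 4.5, 5.0, 0, 4.0, 5.0]

counted = Counter(ratings)
as_dict = dict(counted)

print(counted[5.0])  # 3
print(counted[0.5])  # 0: a missing key counts as zero and is not inserted
print(counted[0.0])  # 1: the int 0 and the float 0.0 are the same key
try:
    as_dict[0.5]
except KeyError:
    print("dict() raises KeyError for a missing rating")
```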

More importantly, dataframes built from `all_reviews` and `all_ratings` are useful for visualizations.

I used the following code to build the initial dataframe:

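```python
import pandas as pd

# Counts taken from all_ratings_dict and all_reviews_dict, ordered from 5.0 down to 0.0
d = {
    "Rating": [5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0, 1.5, 1.0, 0.5, 0.0],
    "# of ratings": [76783, 31517, 35901, 15286, 11813, 3800, 3261, 945, 1238, 983, 2237],
    "# of ratings with reviews": [23509, 9200, 9818, 4258, 3852, 1393, 1256, 389, 494, 440, 2173],
}
df = pd.DataFrame(data=d)
# Fraction of the ratings with each value that also have a review
df["# of ratings with reviews / # of ratings"] = df["# of ratings with reviews"] / df["# of ratings"]
df  # displays the dataframe in a Jupyter notebook
```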

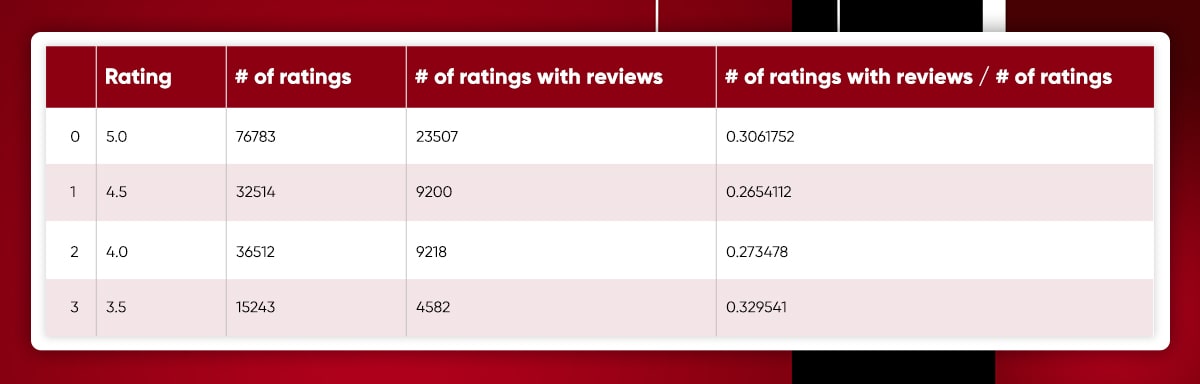

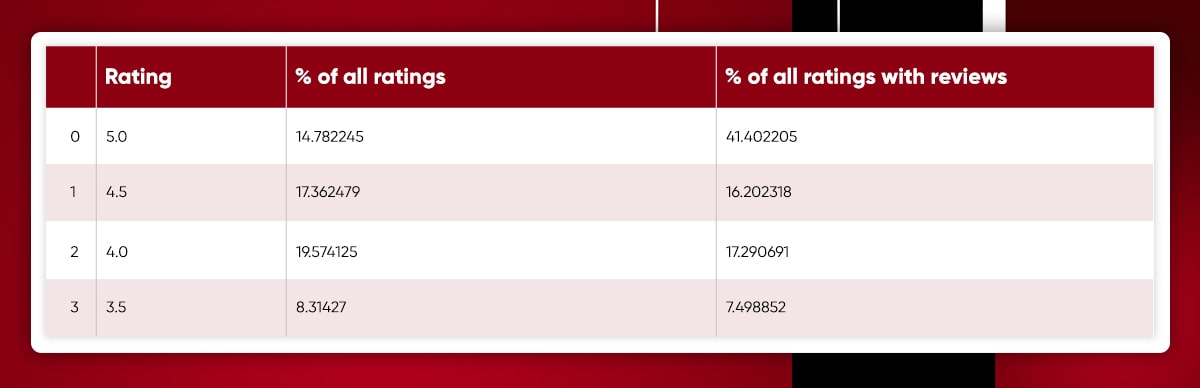

The resulting dataframe:

The Rating column lists the possible rating values in decreasing order, from 5.0 to 0.0.

The # of ratings column shows how many ratings have each value; for example, there are 2,237 ratings with a value of 0.0.

The # of ratings with reviews column shows how many of those ratings also come with a review; for example, 2,173 of the 0.0 ratings have a review.

The # of ratings with reviews / # of ratings column shows what fraction of the ratings with each value include a review. For example, around 30% of all 5.0 ratings have a review, which means about 70% of 5.0 ratings do not.

As the Rating value declines and the ratings become more negative, people tend to submit reviews more often, which shows up as a rising # of ratings with reviews / # of ratings ratio.
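The ratio column is simply the count of ratings with reviews divided by the count of ratings for each value. A standard-library sketch of that arithmetic, reusing the counts from the dataframe code; `review_ratio` is a hypothetical name, and the rounding to four decimals mirrors what `randomize()` does later.

```python
from __future__ import annotations

RATINGS = [5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0, 1.5, 1.0, 0.5, 0.0]
N_RATINGS = [76783, 31517, 35901, 15286, 11813, 3800, 3261, 945, 1238, 983, 2237]
N_REVIEWS = [23509, 9200, 9818, 4258, 3852, 1393, 1256, 389, 494, 440, 2173]


def review_ratio(n_reviews: list[int], n_ratings: list[int]) -> list[float]:
    """Hypothetical helper: share of ratings that come with a review, per rating value."""
    return [round(rev / rat, 4) for rev, rat in zip(n_reviews, n_ratings)]


ratios = review_ratio(N_REVIEWS, N_RATINGS)
for rating, ratio in zip(RATINGS, ratios):
    print(f"{rating:.1f}: {ratio:.4f}")
```

The 5.0 row comes out at about 0.306, the "around 30%" quoted above, while the 0.0 row is far higher.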

The dataframe was then extended using two more variables: the number of all ratings, which we already know is 183,840, and the number of all ratings with reviews, which is 56,788.

```python
number_of_all_ratings = df["# of ratings"].sum()
number_of_all_ratings_with_reviews = df["# of ratings with reviews"].sum()
```

With these variables, two percentage columns are added:

```python
# Insert the percentage columns at positions 2 and 4, next to the counts they are based on
df.insert(2, "% of all ratings", df["# of ratings"] / number_of_all_ratings * 100)
df.insert(4, "% of all ratings with reviews", df["# of ratings with reviews"] / number_of_all_ratings_with_reviews * 100)
```

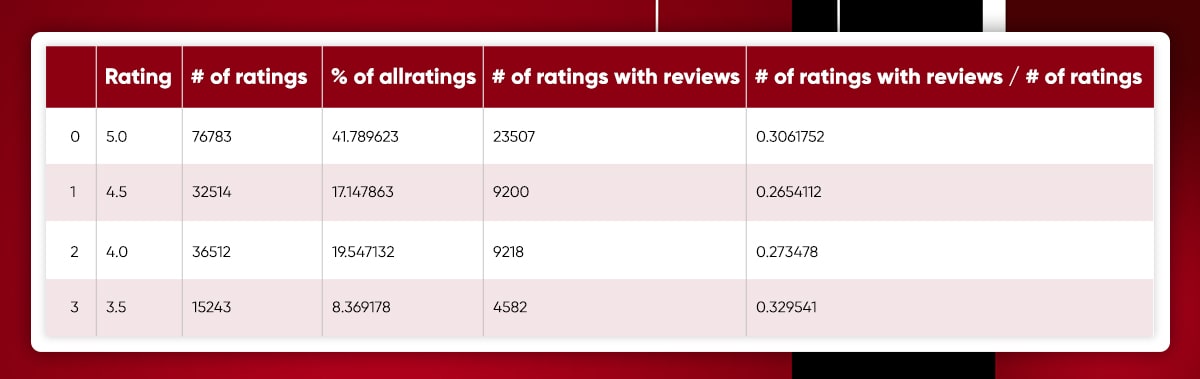

The dataframe with the two new columns:

The % of all ratings column shows the share of all ratings that each Rating value accounts for. For example, 3.0 accounts for 6.43% of all ratings.

The % of all ratings with reviews column shows each Rating value's share of all ratings with reviews. For example, 4.0 accounts for 17.29% of all ratings with reviews.
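The two percentage columns divide each count by its column total. The same arithmetic in plain Python, reusing the counts from the dataframe code; as with `df[...].sum()`, the totals are computed from the counts rather than typed in. `percent_of_total` is a hypothetical name, and the last line picks out the ratings whose share of reviews exceeds their share of all ratings.

```python
from __future__ import annotations

RATINGS = [5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0, 1.5, 1.0, 0.5, 0.0]
N_RATINGS = [76783, 31517, 35901, 15286, 11813, 3800, 3261, 945, 1238, 983, 2237]
N_REVIEWS = [23509, 9200, 9818, 4258, 3852, 1393, 1256, 389, 494, 440, 2173]


def percent_of_total(counts: list[int]) -> list[float]:
    """Hypothetical helper: each count as a percentage of the column total."""
    total = sum(counts)
    return [count / total * 100 for count in counts]


pct_ratings = percent_of_total(N_RATINGS)
pct_reviews = percent_of_total(N_REVIEWS)

# Ratings that make up a larger share of reviews than of all ratings
over_represented = [r for r, a, b in zip(RATINGS, pct_ratings, pct_reviews) if b > a]
```

With these counts, `over_represented` holds exactly the ratings from 3.0 down to 0.0.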

With the dataframes in place, the data can now be visualized.

First, let us compare the number of ratings with the number of reviews, using the column that shows, for each rating, what fraction of ratings contain a review.

The following code plots the data:

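```python
import matplotlib.pyplot as plt
import numpy as np

plt.figure(figsize=(10, 6))
plt.title("Reviews / ratings by star rating")
plt.xticks(np.arange(0, 5.5, step=0.5))  # one tick per half star
# Points plus a connecting line for the reviews/ratings ratio of each rating
plt.scatter(df["Rating"], df["# of ratings with reviews / # of ratings"])
plt.plot(df["Rating"], df["# of ratings with reviews / # of ratings"])
plt.xlabel("Rating")
plt.ylabel("Percentage")
plt.show()
```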

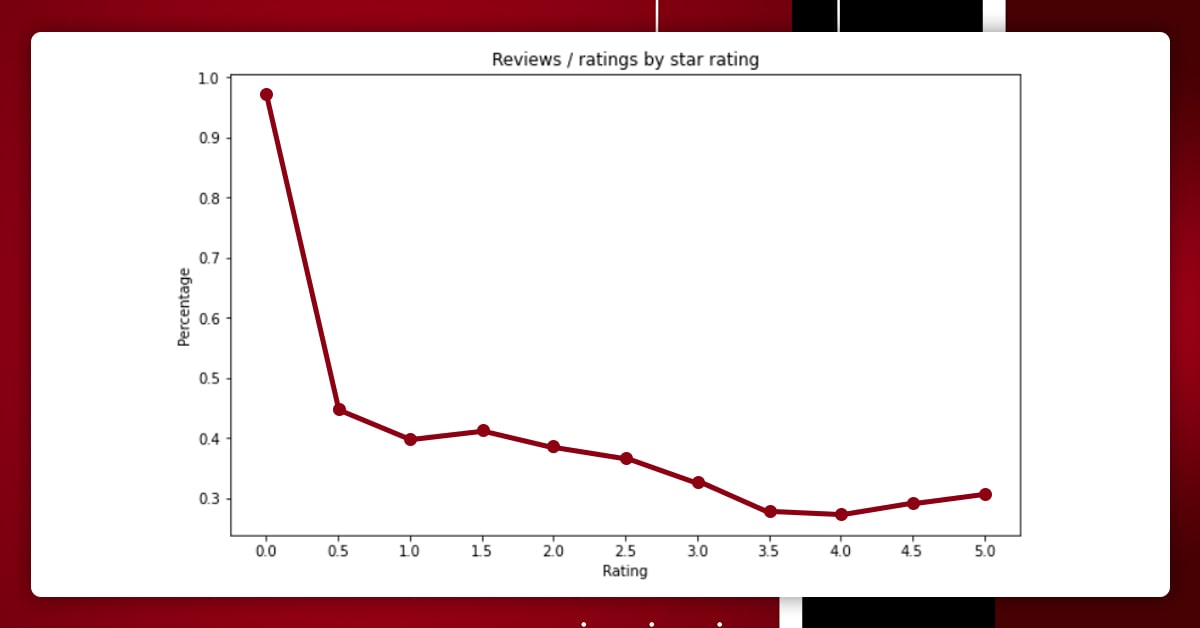

The resulting plot is shown in the image.

The graph shows, for each rating, the share of ratings that come with a review. People who have a negative experience with a book, rating it 2.5 or lower, are more likely to leave a review.

Note also that a 0-star rating can come with either a positive or a negative review.

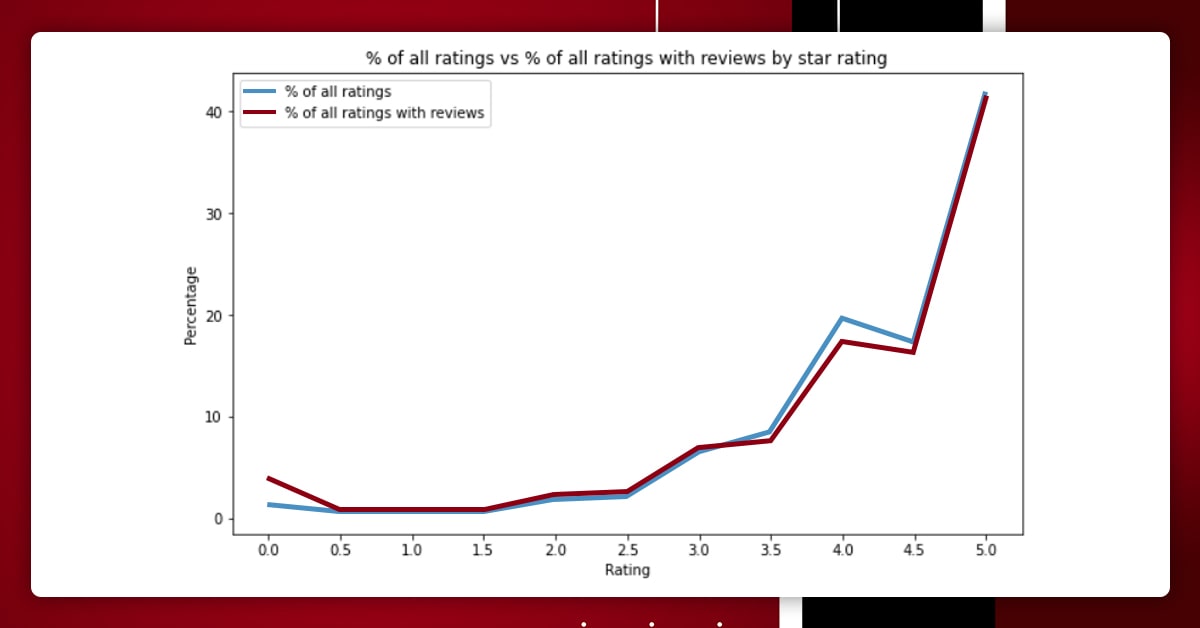

A second chart makes this even clearer.

In this graph, the distributions of ratings and of ratings with reviews differ. Comparing % of all ratings with % of all ratings with reviews, the lower ratings (3.0 down to 0) make up a larger share of the ratings with reviews than of all ratings.

Perhaps the dataframe version of this information is more useful:

The code used to plot the chart:

```python
import matplotlib.pyplot as plt
import numpy as np

y = df["% of all ratings"]
y2 = df["% of all ratings with reviews"]
x = df["Rating"]

fig = plt.figure(figsize=(10, 6))
ax = plt.subplot(111)
# Two lines on the same axes so the distributions can be compared directly
ax.plot(x, y, label="% of all ratings")
ax.plot(x, y2, label="% of all ratings with reviews")
plt.title("% of all ratings vs % of all ratings with reviews by star rating")
plt.xticks(np.arange(0, 5.5, step=0.5))
plt.xlabel("Rating")
plt.ylabel("Percentage")
ax.legend()
plt.show()
```


Naturally, the conclusions of my investigation needed to be statistically confirmed. Data analysts suggested randomly picking a subset of the 890 books, performing the same analysis, and comparing the results with the original output.

If this process is repeated 10 times and gives the same output each time, the final result can be considered good enough to draw conclusions from.

Here this is done by randomly sampling 400 books with the randomize() function.

Each randomize() call generates a dataframe and appends the sample's # of ratings with reviews / # of ratings value for each Rating to a list. Each list is named after a rating value, for example `four` for 4.0, and holds the corresponding values. After the ten random samplings, I printed each list to see whether the original sample of 890 books was large enough.
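The resampling idea behind randomize() can be sketched with the standard library alone. The sketch assumes each book is a dict with `ratings` and `reviews` lists, as built earlier; `resample_ratios` is a hypothetical name, the toy books are generated randomly rather than scraped, and a fixed seed makes the run repeatable.

```python
import random
from collections import Counter

RATINGS = [5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0, 1.5, 1.0, 0.5, 0.0]


def resample_ratios(books, k, runs, rng):
    """Hypothetical helper: per rating, the reviews/ratings ratio over `runs` random samples of k books."""
    results = {rating: [] for rating in RATINGS}
    for _ in range(runs):
        sample = rng.sample(books, k=k)  # k distinct books, drawn without replacement
        ratings = Counter(r for book in sample for r in book["ratings"])
        reviews = Counter(r for book in sample for r in book["reviews"])
        for rating in RATINGS:
            # Skip a rating absent from this sample instead of dividing by zero
            if ratings[rating]:
                results[rating].append(round(reviews[rating] / ratings[rating], 4))
    return results


# Toy data for illustration only: 50 books with 20 random ratings each
rng = random.Random(42)
toy_books = []
for _ in range(50):
    book_ratings = [rng.choice(RATINGS) for _ in range(20)]
    # Pretend that lower ratings are reviewed more often
    book_reviews = [r for r in book_ratings if rng.random() < 0.3 + (5.0 - r) / 10]
    toy_books.append({"ratings": book_ratings, "reviews": book_reviews})

ratios = resample_ratios(toy_books, k=20, runs=10, rng=rng)
```

With the real data, the call would be `resample_ratios(books, k=400, runs=10, rng=random.Random())`.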

Here's an example of a dataframe returned by randomize():

Here is the code:

```python
import random
from collections import Counter

import pandas as pd

# One list per rating value; every randomize() call appends one ratio to each list
five = []
four_and_a_half = []
four = []
three_and_a_half = []
three = []
two_and_a_half = []
two = []
one_and_a_half = []
one = []
half = []
zero = []

RATING_ORDER = [5.0, 4.5, 4.0, 3.5, 3.0, 2.5, 2.0, 1.5, 1.0, 0.5, 0.0]


def randomize():
    # Draw 400 distinct books at random from the 890 scraped books
    sample_books = random.sample(books, k=400)
    sample_ratings = []
    sample_reviews = []
    for book in sample_books:
        sample_ratings.extend(book["ratings"])
        sample_reviews.extend(book["reviews"])
    # Keep the Counters (not dict()) so a rating missing from the sample counts as 0 instead of raising KeyError
    ratings_counted = Counter(sample_ratings)
    reviews_counted = Counter(sample_reviews)
    d = {
        "Rating": RATING_ORDER,
        "# of ratings": [ratings_counted[r] for r in RATING_ORDER],
        "# of ratings with reviews": [reviews_counted[r] for r in RATING_ORDER],
    }
    df = pd.DataFrame(data=d)
    df["# of ratings with reviews / # of ratings"] = df["# of ratings with reviews"] / df["# of ratings"]
    v = df["# of ratings with reviews / # of ratings"]
    # Record this sample's ratio for each rating, in the same order as RATING_ORDER
    lists = [five, four_and_a_half, four, three_and_a_half, three, two_and_a_half,
             two, one_and_a_half, one, half, zero]
    for target, value in zip(lists, v):
        target.append(round(value, 4))
    return df
```

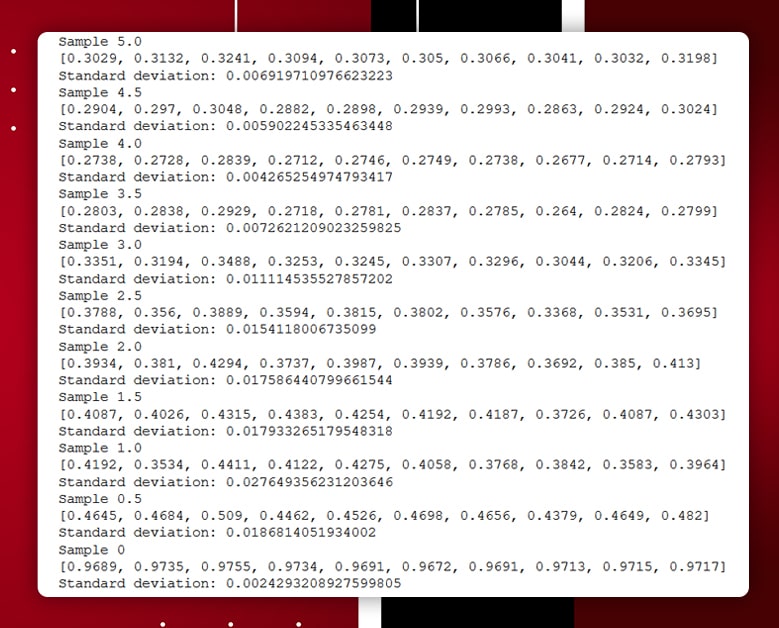

randomize() was run 10 times, and the output is shown in the image.

The samples are listed for each rating value; for example, "Sample 5.0" holds the 10 values for a rating of 5.0.

The list values, for example "0.3029", are the # of ratings with reviews / # of ratings ratios produced by the 10 randomize() runs.

Because the standard deviation shows that the values differ only slightly, the sample of 890 books can be regarded as sufficient, and the conclusion that people are more inclined to submit a review when their rating of a book is unfavorable may be accepted.
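The standard deviation check can be done with Python's `statistics` module. The ten values below are made up for illustration and are not the article's results; the sketch reports the mean, the sample standard deviation, and the spread relative to the mean for one rating's list.

```python
import statistics

# Made-up example: ten reviews/ratings ratios for one rating value
sample_five = [0.3029, 0.3071, 0.3055, 0.3102, 0.3040, 0.3088, 0.3063, 0.3019, 0.3077, 0.3050]

mean = statistics.mean(sample_five)
spread = statistics.stdev(sample_five)  # sample standard deviation (n - 1 in the denominator)

print(f"mean={mean:.4f}, stdev={spread:.4f}, relative spread={spread / mean:.1%}")
```

A standard deviation that is small compared with the gaps between the ratios of different ratings is what makes the conclusion hold.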

It can be concluded that the sample used here is sufficient and the results are reliable. When people have a bad experience reading a book, they tend to give a rating between 3 and 0 and also submit a review. Nothing can be guaranteed, though: ratings and reviews are posted daily, so staying up to date requires ongoing research. Use the web scraping services of ReviewGators to assist you with major analysis and scraping tasks.

Looking for web scraping of book ratings with BeautifulSoup? Contact ReviewGators today!

Feel free to reach out to us if you need any assistance.

We're always ready to help and to answer all your queries. We look forward to hearing from you!

Address: 10685-B Hazelhurst Dr. # 25582, Houston, TX 77043, USA