Which are the Popular Methods of Data Scraping in Data Analytics?

28 December 2022

Gathering data from websites and other online sources is known as "data scraping." Collecting information about your competitors and your clients this way can help you update your products and gives you valuable insight into how your company and its competitors are performing. You can scrape manually or use software, depending on what you need and how much information is involved.

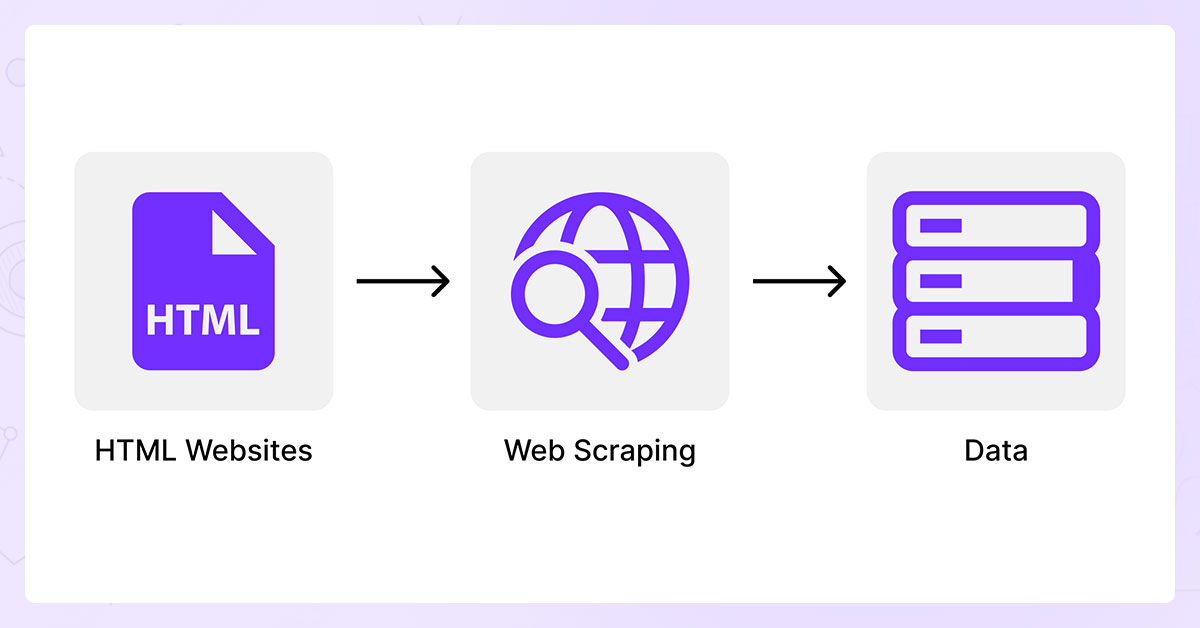

Data extraction from a program's output is known as data scraping. Its most popular application is web scraping, in which a scraper extracts data from a webpage.

Some applications are relatively benign, but there is also a dark side. Scraping tools can be used to obtain protected texts, photos, and movies, and using them that way can violate copyright and intellectual property (IP) laws.

Scraping is often a workaround, because some businesses strive to prevent their content from being stolen or used for unauthorized purposes. They might require users to sign up, subscribe, or pay before they get full access to the information.

Whatever the reason, these businesses employ access and permission controls and other techniques so that the data is not exposed through a simple API. Data scraping is how people get at the data in such situations.

Computer programs called web crawlers or spiders comb through websites. Starting from a site's main page, they follow links to locate the data on its other pages. Many web crawlers are available, each with its own method of extracting content from websites. One example would be crawling for neighborhood eateries to add to a food delivery service like Seamless or Grubhub.
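The core step of any crawler is finding the links on a page so it knows where to go next. The following is a minimal sketch of that step using only Python's standard library. The sample page, the `example.com` URLs, and the names `LinkCollector` and `extract_links` are illustrative assumptions, not part of any particular crawler product.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return all link targets found in an HTML string."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content; a real crawler would download this first.
SAMPLE_PAGE = """
<html><body>
  <a href="/restaurants/pizza-place">Pizza Place</a>
  <a href="https://example.com/restaurants/noodle-bar">Noodle Bar</a>
</body></html>
"""

links = extract_links(SAMPLE_PAGE, "https://example.com/")
print(links)
```

A full crawler would repeat this for every link it discovers, keep track of pages it has already visited, and respect the site's terms and `robots.txt`.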

Fortunately, you don't have to start from scratch when creating a web crawler. Several businesses have already taken care of much of the work for you. For instance, Yandex, a well-known Russian search engine, has its own web crawler. It supports various data extraction formats, including JSON, and can extract information from websites in multiple languages.

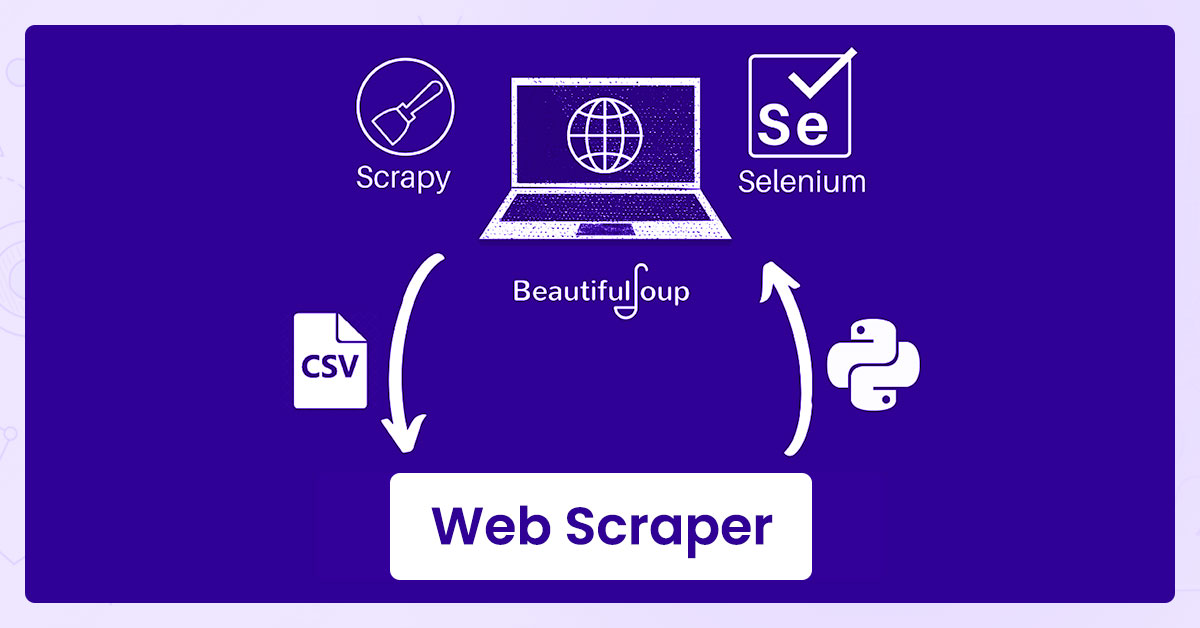

A common technique for obtaining data from websites is web scraping. Using one of the many web-based screen scrapers available, you can scrape online sites quickly and easily without much coding experience. One such screen scraper, for gathering information from websites like YouTube and Twitter, is DataScraper.

You can configure screen scrapers to harvest data from various websites and then save it as files in different formats or load it into a database. These web-based screen scrapers are simple to use and don't require prior programming experience with languages like Python or R. Furthermore, they do not require any server configuration. You can use them for data extraction by simply copying the commands they supply and pasting them into your terminal or command prompt.

Connecting to a database is the oldest method of obtaining data. It is simple to collect different data sets and integrate them into a single table that can be studied as a whole using data aggregation tools like SQL, Hive, Pig, etc. There are libraries for almost every language that make connecting to your database and accessing its data easy if you're taking data from a relational database (like MySQL). Just be careful not to access someone else's databases without their permission.
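As a minimal sketch of pulling several data sets into a single table, the example below uses Python's built-in `sqlite3` module with an in-memory database. The `customers` and `orders` tables and their contents are made up for illustration; with MySQL or another relational database, the SQL would look much the same but the connection library would differ.

```python
import sqlite3

# An in-memory database stands in for a real one here.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 15.5), (3, 2, 8.0);
""")

# Join the two data sets and aggregate them into one result table.
rows = conn.execute("""
    SELECT c.name, COUNT(o.id) AS order_count, SUM(o.total) AS total_spent
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.name
""").fetchall()
conn.close()

for name, order_count, total_spent in rows:
    print(name, order_count, total_spent)
```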

Working with non-relational databases, such as the increasingly popular MongoDB, makes things a little more challenging. Libraries to access these databases exist, but they tend to be less mature and may not give full access to all the data in a database. With your users' consent, getting what you need can still be easy. Some scraping solutions, like NodeXL, only support relational data sets. Consider other options if your data does not fit that format.

Using one or more APIs (application programming interfaces) is the easiest way to gather data from various sources. The term can be confusing, because using an API does not necessarily mean learning to code; what matters here is the data gathering. In other words, an API lets you link one program (like Google Drive) with another (such as your spreadsheet). This way, you can access all kinds of data stored in different places.
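For readers who do want to call an API from code, the pattern is usually the same: build a request URL, fetch the response, and read the JSON it returns. The sketch below illustrates that pattern with Python's standard library. The endpoint `https://api.example.com/v1/products`, its `category` and `page` parameters, and the shape of the response are all hypothetical; a real API's documentation defines these.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_BASE = "https://api.example.com/v1/products"  # hypothetical endpoint


def build_url(base, **params):
    """Append query parameters to a base URL."""
    return f"{base}?{urlencode(params)}"


def fetch_json(url, timeout=10):
    """Download a URL and decode its JSON body (not called in this sketch)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


def product_names(payload):
    """Pull the product names out of a decoded response."""
    return [item["name"] for item in payload.get("items", [])]


# A made-up response body, so the example runs without network access.
SAMPLE_RESPONSE = (
    '{"items": [{"name": "Kettle", "price": 24.99},'
    ' {"name": "Toaster", "price": 31.5}]}'
)

url = build_url(API_BASE, category="kitchen", page=1)
names = product_names(json.loads(SAMPLE_RESPONSE))
print(url)
print(names)
```

Against a real service you would call `fetch_json(url)` instead of decoding the sample string, and typically add an API key as the provider instructs.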

Web scraping is another helpful method for data collection. Web scraping is performed by gaining access to data from a website and saving it in a personal database or spreadsheet. With web scraping technologies, you can write scripts to extract specific data from a website.
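A script of this kind can be quite short. The sketch below parses product names and prices out of an HTML snippet and writes them out as CSV, which any spreadsheet can open. The HTML structure (elements with the classes `product`, `name`, and `price`) is an assumption made for the example; every real site uses its own markup, so the parser has to be adapted to it.

```python
import csv
import io
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects name/price pairs from elements marked with CSS classes."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field whose text we expect next, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if css_class == "product":
            self.products.append({})  # start a new product record
        elif css_class in ("name", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()
            self._field = None


# Hypothetical page fragment; a real script would download the page first.
SAMPLE_HTML = """
<div class="product"><span class="name">Desk Lamp</span><span class="price">$19.99</span></div>
<div class="product"><span class="name">Notebook</span><span class="price">$3.50</span></div>
"""

parser = ProductParser()
parser.feed(SAMPLE_HTML)
products = parser.products

# Write the scraped rows as CSV (to memory here; use open(...) for a file).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
writer.writeheader()
writer.writerows(products)
print(buffer.getvalue())
```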

If you scrape data from an e-commerce website, you can obtain product details like prices and descriptions. Web scraping and API integration are commonly used in similar situations. Both work well, and each has its own advantages and disadvantages. Web scraping is often quicker to get started with but takes more code to build and maintain, whereas APIs usually offer more stable access but require integration work up front. Therefore, if speed is your top need, you might lean toward a web scraper for the time being; if long-term access is more crucial, you can use an API. E-commerce sites frequently publish their open APIs on their own sites or through third-party directories (like ProgrammableWeb).

Several large e-commerce platforms, including Amazon and eBay, provide such APIs, but not all of them do. However, you may be able to get some information from numerous e-commerce websites via a variety of third-party APIs without using web scraping. For instance, BigML provides a free, open-source API that enables you to access information on more than 1 million products from various well-known online stores, like Macy's and Best Buy. These third-party APIs are also much more accessible, so it could be worthwhile to run a quick Google search to check whether your preferred merchant is covered.

Content: A scraper may copy or repurpose content from another website instead of writing its own. For example, bots harvest material to boost search engine rankings.

Reviews: Websites like Airbnb and Yelp take considerable pains to collect client feedback. Such content might be captured and replicated on other websites by some scraper bots.

Pricing: Many businesses hesitate to post their prices, because rival businesses can undercut them once the prices are public. For that reason, specialized scrapers search the internet for price-related content.

Contacts: A contact scraper exists to collect reliable phone numbers and email addresses. It searches websites for any plain-text contact information, going through mailing lists, employee directories, "about us" pages, and similar pages.

Older programs: Many old programs are written in computer languages that are difficult to access. Scraping tools can convert the data they output into a format that is easier to work with.

Videos: Some videos on websites like YouTube are built from scraped content. Website images are scraped and used in the video, and voice-overs can be created from scraped text.

Forms: Some bots can even use JavaScript to complete forms on websites to access gated content quickly.
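To make the "Contacts" item above concrete, the sketch below shows how little code it takes to pick email addresses out of plain text, which is why plain-text contact details are so easy to harvest. The regular expression is a simplified pattern written for this example, not a complete email validator, and the sample text and addresses are made up.

```python
import re

# Simplified pattern for illustration; real-world addresses can be more varied.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(text):
    """Return the unique email addresses in text, in order of first appearance."""
    seen = []
    for match in EMAIL_PATTERN.findall(text):
        if match not in seen:
            seen.append(match)
    return seen


SAMPLE_TEXT = (
    "Reach sales at sales@example.com or support@example.org. "
    "Sales again: sales@example.com"
)

emails = find_emails(SAMPLE_TEXT)
print(emails)
```

Site owners who want to make this harder often avoid publishing addresses as plain text, for example by using contact forms instead.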

The list of some top providers of data scraping tools includes:

Contact ReviewGator for any web scraping services today!
